Good thing Eskom doesn’t power ChatGPT - this is how much power the AI app uses

ChatGPT’s staggering annual electricity consumption of 17.23 terawatt-hours, equivalent to powering New York for nearly four months or China for 15 hours, highlights the growing energy demands of AI apps despite their remarkable advancements in reasoning and generative accuracy. Picture: Google Gemini

As the use of Artificial Intelligence (AI) apps continues to grow at an astronomical rate, it’s worth noting just how much electricity ChatGPT uses each year, and the figure is staggering.

Paul Hoffman, data analyst at investing research platform BestBrokers, explains that each query on ChatGPT is estimated “to consume 18.9 watt-hours, more than 50 times the energy used by a standard Google search (0.3 Wh).”

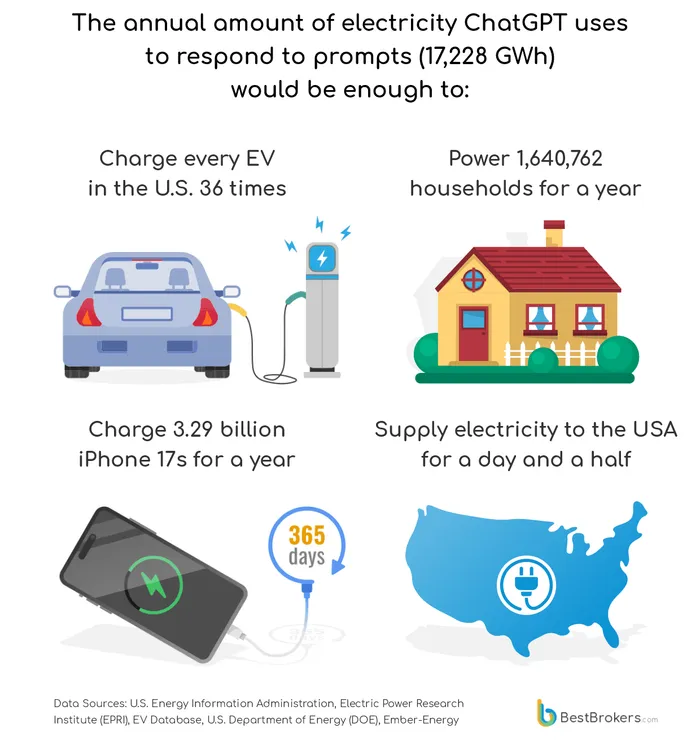

BestBrokers went on to work out the model’s total electricity usage in a year of responding to prompts, and it amounted to 17.23 terawatt-hours.

For comparison, the same amount of electricity could power New York for nearly four months.

Graphic: BestBrokers

ChatGPT’s Energy Consumption: Powering Nations and Electric Cars

ChatGPT’s 17.23 terawatt-hours a year is also enough electricity to power the whole of China for 15 hours. A smaller nation like France could run on it for a quite incredible 13 days and 11 hours.

At that level of consumption, it costs approximately $2.42 billion a year to run ChatGPT.

ChatGPT is said to run more than 2.5 billion requests per day, which requires 47.2 million kilowatt-hours of energy.
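
The daily and annual totals can be cross-checked with simple arithmetic. The sketch below uses only the figures quoted in this article and assumes a 365-day year with a constant daily request volume (an assumption for illustration, not something BestBrokers states). The variable names are illustrative.

```python
# Figures quoted in the article (BestBrokers estimates).
WH_PER_QUERY = 18.9          # watt-hours per ChatGPT query
GOOGLE_WH_PER_SEARCH = 0.3   # watt-hours per standard Google search
QUERIES_PER_DAY = 2.5e9      # "more than 2.5 billion requests per day"
DAYS_PER_YEAR = 365          # assumption: constant volume all year

daily_kwh = WH_PER_QUERY * QUERIES_PER_DAY / 1_000   # Wh -> kWh
annual_twh = daily_kwh * DAYS_PER_YEAR / 1e9         # kWh -> TWh
ratio_to_google = WH_PER_QUERY / GOOGLE_WH_PER_SEARCH

print(f"Daily:  {daily_kwh / 1e6:.2f} million kWh")
print(f"Annual: {annual_twh:.2f} TWh")
print(f"Per query vs Google search: {ratio_to_google:.0f}x")
```

This gives roughly 47.25 million kWh a day and about 17.25 TWh a year, in line with the rounded 47.2 million kWh and 17.23 TWh quoted here. The small gap is consistent with rounding the daily figure before multiplying by 365.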

Over a full year, that consumption could power every house in the US for more than four and a half days.

If we’re talking about electric cars, a year of ChatGPT’s electricity could fully charge around 238 million of them.
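
The article does not state the electricity price or the battery capacity behind the cost and electric-car comparisons, but both can be backed out from the quoted figures. The sketch below assumes both comparisons are made against the 17.23 terawatt-hour annual total; the resulting values are implied by the arithmetic, not numbers published by BestBrokers.

```python
# Figures quoted in the article.
ANNUAL_KWH = 17.23e9       # 17.23 TWh expressed in kWh
ANNUAL_COST_USD = 2.42e9   # approximate annual cost
EVS_CHARGED = 238e6        # electric cars fully charged

# Assumption: both comparisons use the annual total.
implied_rate = ANNUAL_COST_USD / ANNUAL_KWH      # USD per kWh
implied_battery_kwh = ANNUAL_KWH / EVS_CHARGED   # kWh per car

print(f"Implied electricity price: ${implied_rate:.3f}/kWh")
print(f"Implied battery size: {implied_battery_kwh:.1f} kWh per car")
```

Under those assumptions, the quoted figures imply a price of about 14 US cents per kilowatt-hour and a battery of roughly 72 kWh per car.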

“The newest iterations of ChatGPT and other frontier models are delivering remarkable gains in reasoning and generative accuracy, but their energy demands are becoming impossible to ignore,” said BestBrokers data analyst Alan Goldberg.

“Training runs for state-of-the-art systems now require tens of gigawatt-hours, and inference isn’t cheap either: each query from the most advanced models can use an order of magnitude more power than older architectures. Efficiency improvements are real, but they’re being outpaced by surging global usage and escalating model size.”